A "voice" for individuals with Cerebral Palsy

"A world where you can't say how you feel or what you need, that's the world for most of the people with cerebral palsy, some of them can only move a finger or an arm."

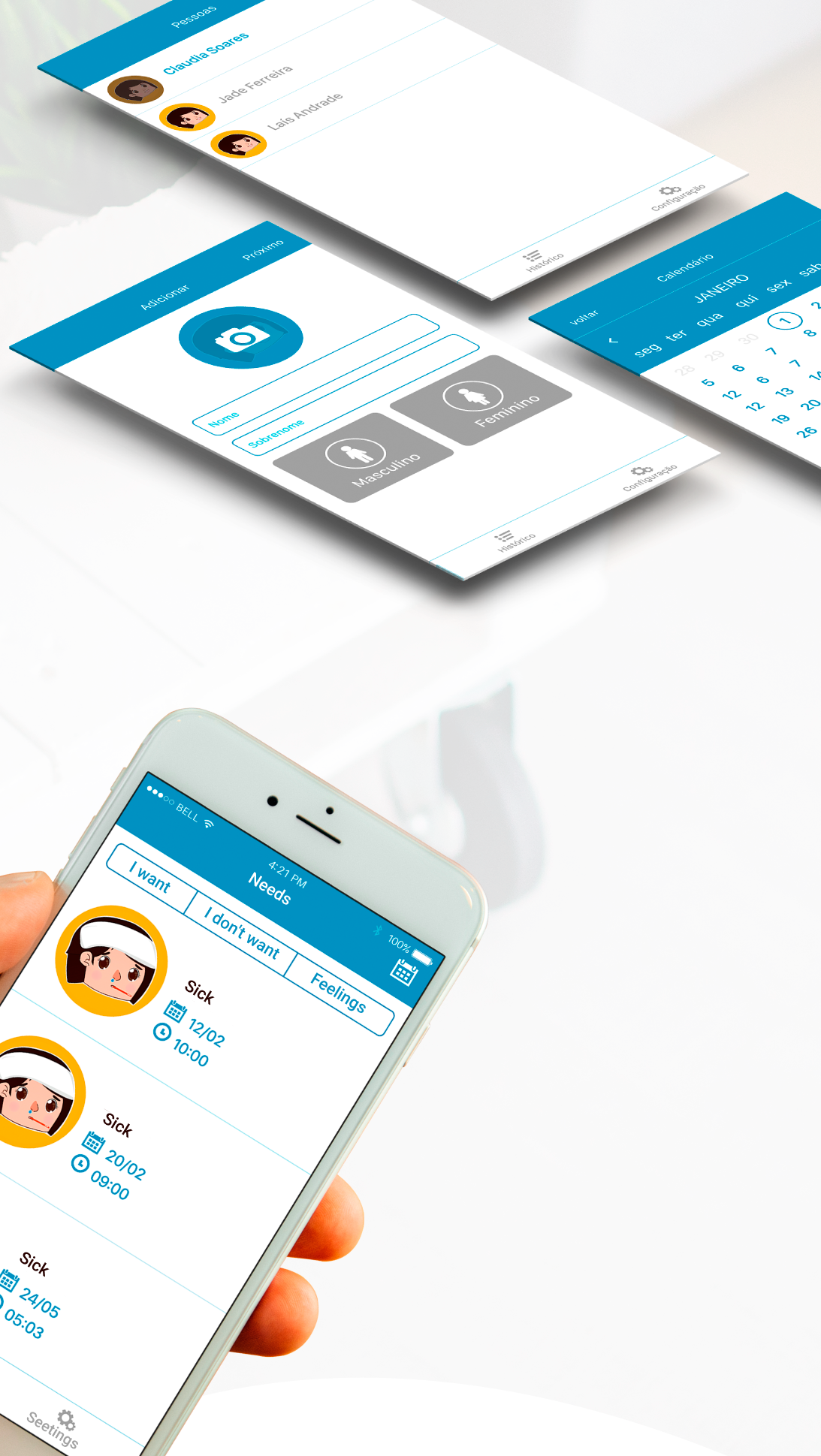

My role: Initial research, workflow creation, illustration, and interaction design for both platforms.

Year: 2015

Platform: iOS (iPad and iPhone)

Research

The most difficult part was understanding and empathizing with the users. It is hard to imagine all the struggles individuals with cerebral palsy face and to create an interface that accommodates most of them, so it took a while to reach a first version.

The challenge was to make it simple and easy to interact with, requiring as little effort as possible.

We built an MVP based on research, academic articles, and initial hypotheses, and tested it with individuals with cerebral palsy and with psychologists.

What we learned from the test:

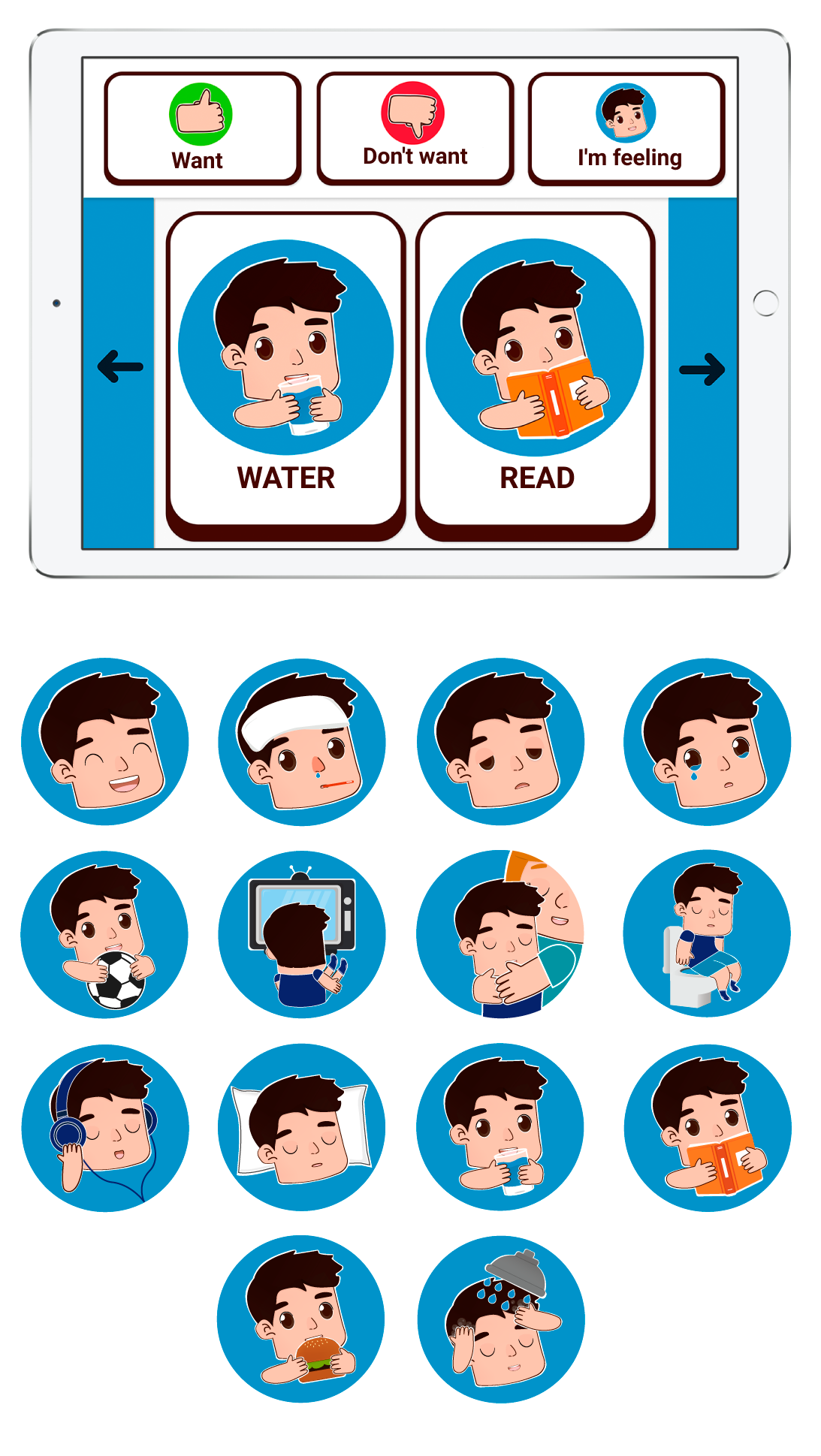

- Minimalist icons don't work for them; they need an avatar that represents actions (like AAC cards).

- The touch areas need to be accurate, to avoid errors caused by motor coordination difficulties.

So we made the final version:

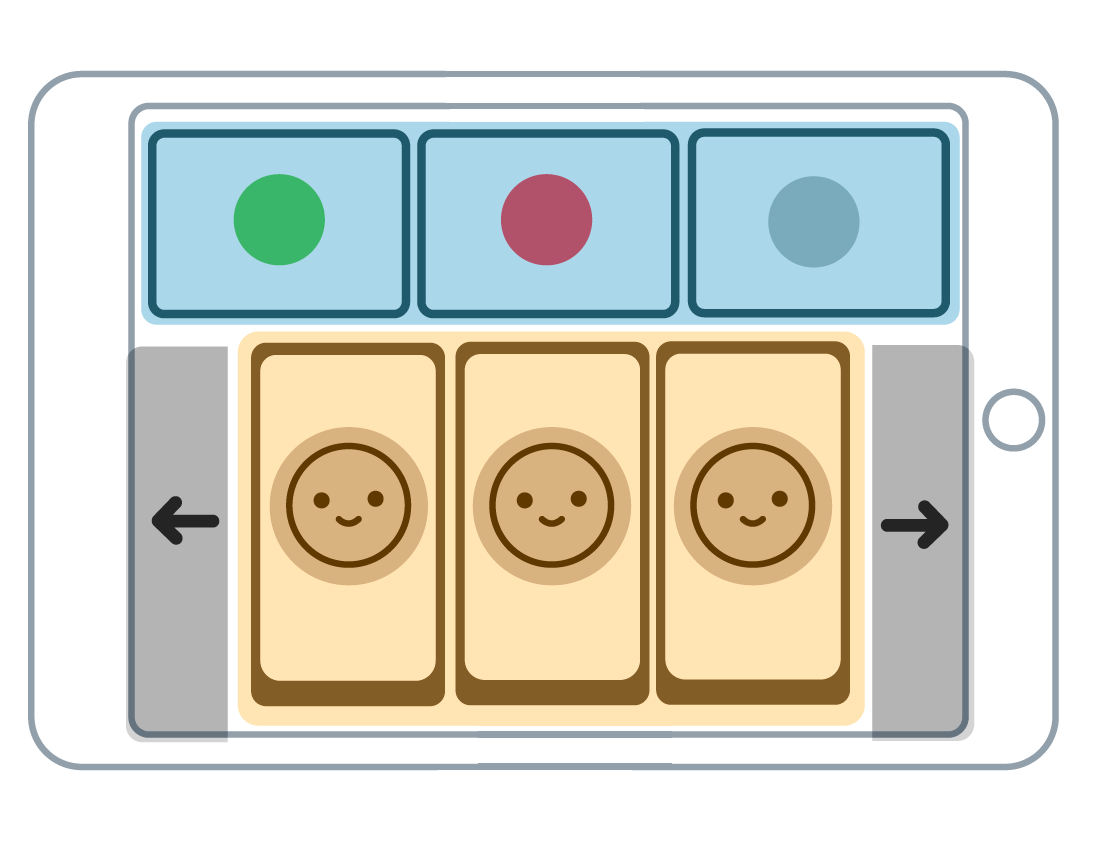

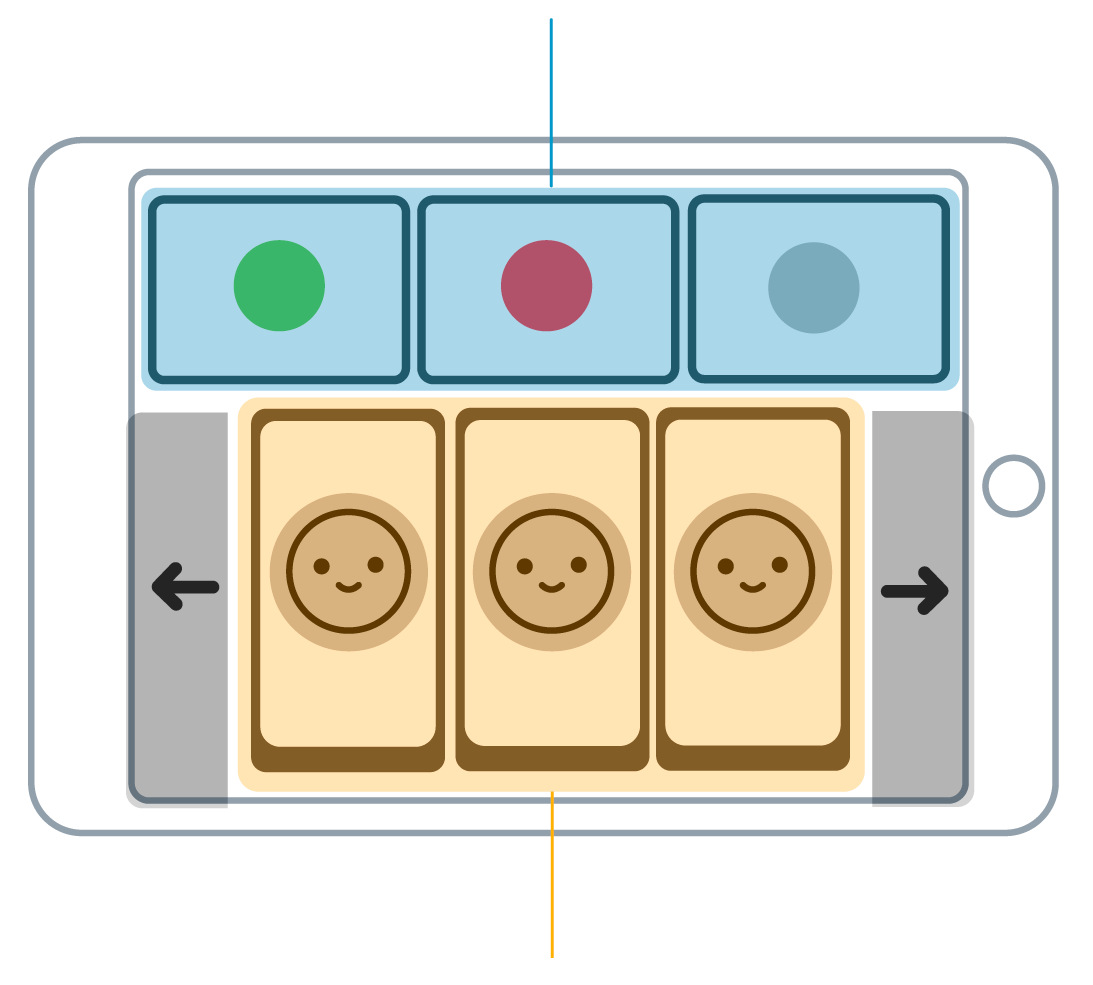

Information hierarchy

Top options

The main interactions are "I want", "I don't want", and "I'm feeling". They were placed at the top so the hierarchy can be understood quickly, and they are distinguished by color.

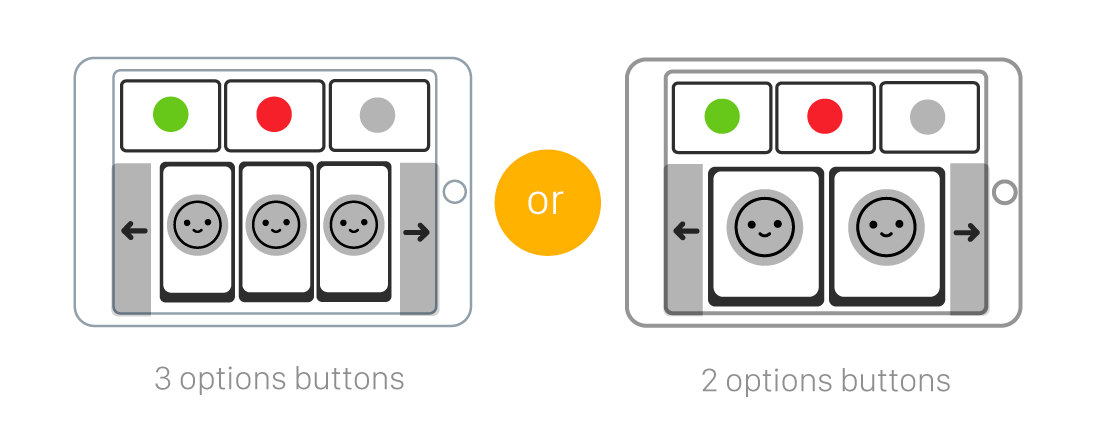

Bottom options

For better touch accuracy and visibility, this part of the screen shows a maximum of 3 options, but the caregiver or parent can change this number with the iPhone app.
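As a rough illustration of that rule, here is a minimal sketch of how a caregiver-defined limit could cap the options shown. It is not the app's actual code: the names `CaregiverSettings`, `max_options`, and `visible_options` are hypothetical, and Python is used only as neutral notation. The default of 3 is the one value taken from the design.

```python
from dataclasses import dataclass

# Default taken from the design: at most 3 options in the lower area.
DEFAULT_MAX_OPTIONS = 3


@dataclass
class CaregiverSettings:
    """Hypothetical settings the caregiver edits in the iPhone app."""
    max_options: int = DEFAULT_MAX_OPTIONS


def visible_options(options: list[str], settings: CaregiverSettings) -> list[str]:
    """Return the options to show in the lower part of the iPad screen."""
    # Assumption: always show at least one option, even if the setting is invalid.
    limit = max(1, settings.max_options)
    return options[:limit]
```

With the default settings, a list of four options would be cut to the first three; raising `max_options` to 4 would show all of them.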

Emotional character

Speaking through someone is much better than speaking through a machine, so a character was created and applied according to the context.

All the characters are accompanied by text, following the psychologists' guidance, to help users learn the association between an action and its word.

The iPhone version

This version was made for the parent/caregiver. It gives access to settings, lets them personalize the iPad screen, and shows a history of the actions made, which is helpful for medical consultations and for following emotional variations.
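To make the history idea concrete, here is a minimal sketch of how logged actions could be summarized per day so that emotional variations are easy to follow. This is an assumption about one possible data shape, not the app's real model: `ActionEvent`, its fields, and `feelings_per_day` are hypothetical names, and only the three category labels come from the design.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ActionEvent:
    """Hypothetical record of one selection made on the iPad."""
    timestamp: datetime
    category: str  # "I want", "I don't want", or "I'm feeling"
    option: str    # hypothetical option label, e.g. "happy"


def feelings_per_day(events):
    """Count "I'm feeling" selections for each calendar day."""
    summary = {}
    for event in events:
        # Only emotional selections matter for this summary.
        if event.category != "I'm feeling":
            continue
        day = event.timestamp.date().isoformat()
        summary.setdefault(day, Counter())[event.option] += 1
    return summary
```

A summary like this could be shown to a doctor or psychologist during a consultation, one row per day, instead of a raw list of taps.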

The main goals of Laví:

- For individuals with cerebral palsy: more independence, the possibility to communicate ("speak"), and inclusion.

- For the parent/caregiver: keeping up with the individual's routine and feelings, better communication, and therefore a better relationship.